Custom Speech Datasets for AI: High-Quality Audio & Transcriptions

Off-the-shelf (OTS) and custom speech dataset collection and processing to improve speech models

Pangeanic provides end-to-end Speech Dataset solutions for global enterprises. Leveraging our proprietary PECAT™ platform, we deliver high-accuracy, human-annotated audio data in 200+ languages and dialects. Whether you need ASR training data for low-resource dialects or TTS corpora with full IP ownership, our datasets are built for privacy, diversity, and performance.


The PECAT Advantage: Workflow and Quality Control

PECAT is our speech data collection and annotation platform for multilingual AI training. By combining the PECAT platform with mobile phone apps, Pangeanic has built a complete speech data collection workflow designed to increase the language coverage and accuracy of both speech recognition and speech-to-text systems.

As humans interact more and more with machines, and with the growing needs of an aging population, speech datasets are becoming a crucial component of Artificial Intelligence (AI) systems. With the rise of Natural Language Processing (NLP) technologies, speech recognition has become increasingly important for a wide range of applications, including voice assistants, language translation, and transcription.

With Pangeanic, not only can you create, manage and edit datasets hassle-free online with an easy drag-and-drop UI, but you can also monitor how our taskers are performing and recordings are progressing.

All speech data will be exhaustively cleansed and annotated as per your requirements so that your algorithms grow as accurate, as strong and wise as you want!

Visit our page on Speech Data Annotation for more information on annotation.

Who Uses Our Speech Datasets?

Our speech datasets, transcription and annotation workflows have supported multilingual AI deployments at major AI companies and well-known apps across the world, European agencies, and regulated enterprise environments requiring secure and sovereign AI infrastructure.

- AI companies developing the future of conversational AI

- Regulated enterprise environments

- Multilingual government deployments (Spanish Parliament)

- Valencian Parliament (Les Corts, Spain)

- European public sector institutions

This is how we process, label, classify and annotate speech data with PECAT


What is Speech Data?

Speech datasets for AI are curated collections of audio files and corresponding transcriptions used to train Automatic Speech Recognition (ASR), Text-to-Speech (TTS), and Natural Language Understanding (NLU) models. High-quality datasets must include diverse acoustic environments, a range of sampling rates (8kHz to 44.1kHz), and rich metadata.

Speech data can take many forms, including phone conversations, recorded interviews, podcasts, and more. Just as we have done for parallel corpora in machine translation systems, we at Pangeanic are building speech datasets. Some recordings capture spontaneous speech; in others, speakers read text drawn from our parallel corpora.

Speech Datasets for ASR, Voicebots and LLM-Based Speech Applications

Our multilingual speech datasets are used to train and fine-tune:

- Automatic Speech Recognition (ASR) systems

- Speech-to-Text (STT) engines

- Text-to-Speech (TTS) voice models

- Call-center voice analytics platforms

- Automotive voice assistants

- Multilingual LLM-based virtual agents

Key Benefits of Pangeanic Speech Datasets

- Full IP transfer options

- Ethical and consent-based data collection

- Native taskers and demographic diversity

- Quality assurance workflows

- Metadata-rich annotation (accent, noise, sentiment)

- GDPR-compliant European data collection

- Secure private dataset delivery

Formats and Metadata

Our speech datasets are delivered in production-ready audio formats optimized for ASR, TTS and LLM-based speech pipelines.

We support:

| Feature | Specification / Options |
| --- | --- |
| Audio file formats | WAV (uncompressed), FLAC, MP3, NIST SPHERE |
| Sampling rates | 8 kHz (telephony), 16 kHz (standard ASR), 44.1 kHz to 48 kHz (high-fidelity/TTS) |
| Bit depth | 16-bit, 24-bit, 32-bit float |
| Channel configuration | Mono, stereo, multi-channel (beamforming/array) |
| Metadata fields | Age, gender, dialect/accent, device ID, SNR (Signal-to-Noise Ratio), transcription (orthographic/phonetic) |
| Annotation types | Time-stamping (word/phoneme level), speaker diarization, sentiment labeling |
| Compliance | GDPR, CCPA, ISO 27001 (data security) |
| Compression parameters | Configurable depending on downstream model requirements |

This ensures compatibility with modern Automatic Speech Recognition (ASR), Speech-to-Text (STT), Text-to-Speech (TTS), and real-time voice analytics systems.
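
As an illustration of how a delivered file could be checked against such a specification, here is a minimal Python sketch using only the standard library `wave` module. The `EXPECTED` values and all function names are assumptions made for this example (they follow the 16 kHz "standard ASR" row above), not part of any Pangeanic tooling, and the sketch covers uncompressed PCM WAV only.

```python
import io
import wave

# Hypothetical delivery spec: 16 kHz, 16-bit (2 bytes per sample), mono.
EXPECTED = {"sample_rate": 16000, "sample_width_bytes": 2, "channels": 1}


def describe_wav(fileobj):
    """Read sampling rate, sample width, channel count and duration from a WAV header."""
    with wave.open(fileobj, "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        return {
            "sample_rate": rate,
            "sample_width_bytes": wav.getsampwidth(),
            "channels": wav.getnchannels(),
            "duration_s": frames / rate,
        }


def mismatches(info, expected=EXPECTED):
    """Return {field: (actual, expected)} for every field that differs from the spec."""
    return {k: (info[k], v) for k, v in expected.items() if info[k] != v}


def make_silent_wav(rate=16000, seconds=1, width=2, channels=1):
    """Build a silent in-memory WAV so the example runs without any files on disk."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(width)
        wav.setframerate(rate)
        wav.writeframes(b"\x00" * (rate * seconds * width * channels))
    buf.seek(0)
    return buf


info = describe_wav(make_silent_wav())
print(info, mismatches(info))
```

An empty result from `mismatches` means the file header agrees with the assumed spec; a telephony file recorded at 8 kHz would be reported under `sample_rate`.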

Structured Metadata for Robust Model Training

High-quality speech AI is not built on audio alone: it depends on structured, reliable metadata.

Each recording can be enriched with detailed, ML-ready metadata fields including:

Speaker Demographics

- Age range

- Gender

- Accent region / dialect

- Native vs. non-native speaker

- Geographical origin

These variables are critical for reducing demographic bias and improving generalization across multilingual deployments.

Recording Environment

- Environment type: Studio, office, home, public space, in-the-wild

- Channel type: Telephone, headset microphone, broadcast mic, mobile device

- Device model

- Room acoustics profile

- Signal-to-Noise Ratio (SNR)

Environmental labeling enables models to perform robustly under real-world conditions such as call-center audio, automotive assistants, and mobile voice interfaces.
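
The SNR field listed above can be understood as a power ratio expressed in decibels. The following Python sketch shows one common way to estimate it, assuming a speech segment and a separate noise-only segment (for example, a silence stretch from the same recording) are available. The function names and the synthetic signals are illustrative only.

```python
import math


def power(samples):
    """Mean squared amplitude of a sequence of samples."""
    return sum(s * s for s in samples) / len(samples)


def snr_db(speech, noise):
    """SNR in decibels: 10 * log10(P_speech / P_noise)."""
    return 10 * math.log10(power(speech) / power(noise))


# Synthetic data: a 440 Hz tone stands in for speech, and the "noise"
# is the same waveform at one tenth of the amplitude.
speech = [math.sin(2 * math.pi * 440 * n / 16000) for n in range(16000)]
noise = [0.1 * s for s in speech]

print(round(snr_db(speech, noise), 1))  # 20.0
```

A tenfold amplitude ratio is a hundredfold power ratio, which is why the example prints 20 dB.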

Acoustic & Technical Attributes

- Audio duration

- Sampling rate

- Bitrate

- Compression level

- Noise class: traffic, crowd, office noise, background music, silence segments

These features allow ML engineers to stratify datasets and design domain-specific training subsets.

Linguistic & Contextual Tags

- Topic domain (finance, healthcare, government, customer support, etc.)

- Intent classification

- Sentiment / emotion labeling

- Named entities

- Code-switching markers (where applicable)

This metadata is particularly valuable for training LLM-based speech systems, multilingual voicebots, and conversational AI agents.
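
To make the categories above concrete, a single recording's metadata could be represented as one JSON object per audio file. The Python sketch below is hypothetical: the field names, types and sample values are assumptions chosen to mirror the lists above, not a documented delivery schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class RecordingMetadata:
    # Illustrative field names only; an actual schema is agreed per project.
    audio_file: str
    age_range: str
    gender: str
    accent_region: str
    native_speaker: bool
    environment: str
    channel: str
    sample_rate_hz: int
    duration_s: float
    snr_db: float
    noise_classes: list = field(default_factory=list)
    topic_domain: str = ""
    sentiment: str = "neutral"


record = RecordingMetadata(
    audio_file="rec_0001.wav",
    age_range="30-39",
    gender="female",
    accent_region="Valencia",
    native_speaker=True,
    environment="office",
    channel="headset microphone",
    sample_rate_hz=16000,
    duration_s=7.4,
    snr_db=24.5,
    noise_classes=["office noise"],
    topic_domain="customer support",
)

# One JSON object per line (JSON Lines) keeps large datasets easy to stream.
line = json.dumps(asdict(record), ensure_ascii=False)
print(line)
```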

Metadata Matters for AI Training

Speech datasets without structured metadata constrain model optimization and reproducibility. Raw audio alone lacks the feature-level signals required to properly control training distribution, evaluate bias, and perform targeted fine-tuning. By systematically collecting, normalizing, and validating metadata at ingestion time, we enable dataset stratification across accent region, age, gender, channel type, acoustic environment, and signal-to-noise ratio (SNR).

This structured layer allows ML engineers to:

- Perform controlled domain adaptation experiments (e.g., telephony-only vs. broadcast audio)

- Improve accent and dialect generalization through balanced sampling

- Reduce hallucination and recognition drift in ASR pipelines by isolating noisy or low-SNR subsets

- Benchmark performance across demographic slices to detect bias early

- Build noise-robust acoustic models using environment-tagged data

- Accelerate fine-tuning cycles by dynamically filtering and re-weighting training batches

Metadata also supports reproducible evaluation by enabling fixed validation splits based on channel, region, or acoustic class — critical for regression testing during model updates.
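
One possible way to obtain fixed, reproducible splits is to derive the split from a hash of a stable identifier and then slice by metadata. The Python sketch below assumes each record carries a `speaker_id` and a `channel` field; both names, the 10% validation share and the sample records are assumptions for illustration.

```python
import hashlib


def split_for(speaker_id, validation_percent=10):
    """Assign a speaker to 'validation' or 'train' deterministically.

    Hashing the speaker ID means the assignment never changes between runs
    and that all recordings from one speaker land in the same split.
    """
    digest = hashlib.sha256(speaker_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return "validation" if bucket < validation_percent else "train"


def select(records, split, **criteria):
    """Keep records in the given split whose metadata matches every criterion."""
    return [
        r for r in records
        if split_for(r["speaker_id"]) == split
        and all(r.get(k) == v for k, v in criteria.items())
    ]


records = [
    {"speaker_id": "spk_001", "channel": "telephone", "snr_db": 12.5},
    {"speaker_id": "spk_002", "channel": "broadcast", "snr_db": 31.0},
    {"speaker_id": "spk_003", "channel": "telephone", "snr_db": 8.2},
]

train_tel = select(records, "train", channel="telephone")
val_tel = select(records, "validation", channel="telephone")
print(len(train_tel), len(val_tel))
```

Because the split depends only on the identifier, adding new recordings later does not move existing ones between training and validation, which is what makes regression testing comparable across model updates.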

In practice, metadata converts a collection of audio files into a controllable training corpus. It enables systematic experimentation, targeted error reduction, and production-grade model governance. Without it, speech AI systems remain probabilistic black boxes. With it, they become engineerable systems.
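
Benchmarking across demographic slices, as listed above, can be sketched with a word error rate (WER) computed per metadata value. The following Python example is a minimal illustration: the `accent_region` key, the sample transcripts and the function names are assumptions, and the WER here is plain word-level edit distance with no text normalization.

```python
from collections import defaultdict


def word_errors(reference, hypothesis):
    """Return (word-level Levenshtein distance, number of reference words)."""
    ref, hyp = reference.split(), hypothesis.split()
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1], len(ref)


def wer_by_slice(results, key):
    """Aggregate errors and reference words per metadata value, then divide."""
    errors, words = defaultdict(int), defaultdict(int)
    for item in results:
        e, n = word_errors(item["reference"], item["hypothesis"])
        errors[item[key]] += e
        words[item[key]] += n
    # Slices with no reference words are skipped to avoid dividing by zero.
    return {s: errors[s] / words[s] for s in errors if words[s]}


results = [
    {"accent_region": "A", "reference": "turn on the lights",
     "hypothesis": "turn on the light"},
    {"accent_region": "A", "reference": "call my mother",
     "hypothesis": "call my mother"},
    {"accent_region": "B", "reference": "what is the weather",
     "hypothesis": "what the weather"},
]

print(wer_by_slice(results, "accent_region"))
```

A large gap between slices is a signal to rebalance or augment the training data for the weaker group.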

Ethical Data: How is Pangeanic Speech Data Collected?

We collect speech datasets for AI training in several ways. Mostly, we use our PECAT platform and apps to recruit individuals to record themselves speaking in response to specific text prompts that they can see in our apps.

We also collect spontaneous speech, which our team of internal transcribers in Europe and Japan converts into text.

Finally, we enter into agreements to buy small sections of pre-existing audio files that have been made publicly available, particularly in low-resource languages.

All our sets are fully consented, human-in-the-loop (HITL) collections; they provide GDPR-compliant audio anonymization and respect Diversity, Equity, and Inclusion (DEI) in speech sampling (to combat algorithmic bias).

Specialized Audio Collections for Advanced ML

To move beyond basic recognition, modern AI requires nuanced data. We provide specialized collections including:

- Spontaneous Speech Corpora: Unlike scripted reading, our corpora capture natural, unscripted human interaction—essential for training models to handle fillers, hesitations, and the "messiness" of real-world dialogue.

- Low-Resource Language Audio Data: We bridge the digital divide by sourcing high-quality speech data for under-represented languages and dialects, ensuring your AI remains inclusive and globally viable.

- Phonetically Balanced Sentences: Our datasets are meticulously designed to include all phonemes of a target language, providing the linguistic variety necessary for high-fidelity Text-to-Speech (TTS) synthesis.

- Wake-Word & Command Collection: We record thousands of variations of specific trigger words (e.g., "Hey Pangea") across diverse demographics to minimize "False Rejection" rates in voice-activated devices.

- Far-Field vs. Near-Field Audio: We provide data recorded at varying distances and acoustic environments to train models that can distinguish a speaker’s voice from 10 feet away or in a noisy moving vehicle.
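
As a rough illustration of the phoneme-coverage idea behind phonetically balanced sentences, the Python sketch below picks prompts greedily until every target phoneme appears at least once. This is a generic greedy set-cover heuristic, not a description of how Pangeanic builds its prompt lists. It addresses coverage only, not frequency balance, and the sentence IDs and phoneme symbols are toy placeholders.

```python
def select_covering_sentences(candidates, target_phonemes):
    """Greedy set cover over phonemes.

    candidates maps a sentence ID to the set of phonemes it contains.
    Returns (chosen sentence IDs in pick order, phonemes left uncovered).
    """
    remaining = set(target_phonemes)
    chosen = []
    while remaining and candidates:
        # Pick the sentence that adds the most phonemes not yet covered.
        best = max(candidates, key=lambda s: len(remaining & candidates[s]))
        gained = remaining & candidates[best]
        if not gained:
            break  # the remaining phonemes occur in no candidate sentence
        chosen.append(best)
        remaining -= gained
    return chosen, remaining


# Toy inventory: letters stand in for phoneme symbols.
candidates = {
    "s1": {"a", "b", "c"},
    "s2": {"c", "d"},
    "s3": {"d", "e", "f", "g"},
    "s4": {"a"},
}
target = {"a", "b", "c", "d", "e", "f", "g"}

chosen, missing = select_covering_sentences(candidates, target)
print(chosen, missing)
```

Any phonemes returned in `missing` would indicate that new prompts need to be written before recording starts.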

Case Studies

Valencia's Regional Parliament

Pangeanic has applied its expertise to the Valencian Parliament (Les Corts Valencianes). This new initiative applies human-enhanced transcription and subtitling workflows to make legislative sessions more accessible to citizens and media outlets across Spain’s multilingual regions.

Spanish Parliament

In a revolutionary step towards modernizing parliamentary institutions, the Spanish Parliament awarded the AI transcription services contract to Pangeanic in 2025, marking the first time that artificial intelligence will be used in Spanish parliamentary transcriptions at the national level.

Comprehensive speech data processing

Pangeanic transformed over 2,000 hours of raw, multilingual audio into a high-quality training dataset. The project addressed significant challenges in audio quality and format by executing a multi-stage pipeline that included precise preprocessing and segmentation, manual transcription by specialized linguists, speaker diarization, named entity recognition, and the crucial step of personally identifiable information (PII) anonymization for regulatory compliance. All enriched data was compiled into a final JSON deliverable within four weeks, demonstrating the company's end-to-end capability in preparing complex, ethically-sound datasets for AI model training through a combination of proprietary technology (PECAT platform) and expert human oversight.
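
To give a sense of what the PII anonymization and JSON steps involve, here is a deliberately simplified Python sketch that masks e-mail addresses and phone-like numbers in a transcript segment. It is not the pipeline used in the project: the regular expressions, the placeholder labels and the segment fields are assumptions, and real anonymization would typically combine named entity recognition with human review.

```python
import json
import re

# Simplistic patterns for illustration; they will miss many real-world cases.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def mask_pii(text):
    """Replace every match of each pattern with a bracketed placeholder label."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


# Hypothetical diarized segment with timestamps in seconds.
segment = {
    "speaker": "S1",
    "start": 0.0,
    "end": 4.2,
    "text": "Write to ana@example.com or call +34 600 123 456.",
}
segment["text"] = mask_pii(segment["text"])

print(json.dumps(segment, ensure_ascii=False))
```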

Need Speech Datasets? Pangeanic will make them for you

Speech to Text Datasets - Transcription

We've been the preferred transcription vendor for Valencia's Parliament, transcribing hundreds of hours of parliamentary sessions. Our mobile and desktop apps allow our taskers to annotate content and context so that your Natural Language Processing (NLP) technology improves. You’ll love our mobile app and PECAT platform for speech!

We deliver stock or made-to-order speech data at scale, in large volumes and at high quality. Pangeanic offers a 3-month test guarantee and marks delivered content with the type of IP agreement. Pangeanic's speech corpora are meticulously collected and revised, and all work is guaranteed. This includes labeling homonyms: in “I wrote a letter on the bat”, for example, “bat” may refer to the nocturnal flying animal (as when a veterinarian writes about it), not the wooden object used to hit a baseball. Our annotators consider such cases, along with domain and context, to resolve any possible ambiguity.

Building on 20 years of translation services, Pangeanic is uniquely positioned when it comes to language services: starting from our initial translator database, we have expanded to add thousands of speech taskers worldwide, making sure that only native speakers annotate the text.

Text-to-Speech Datasets

Our recordings offer you full ownership and full copyright, both for the audio collected and for the transcription used in ML training. Pangeanic follows processes that build Ethical AI into every step, so you can be sure it is passed on to your products. Our customers enjoy a smooth relationship with a trustworthy vendor of text-to-speech services that supplies training datasets to improve ASR performance, freeing you from the hassle of generating, collecting, and processing audio, while adding valuable metadata.

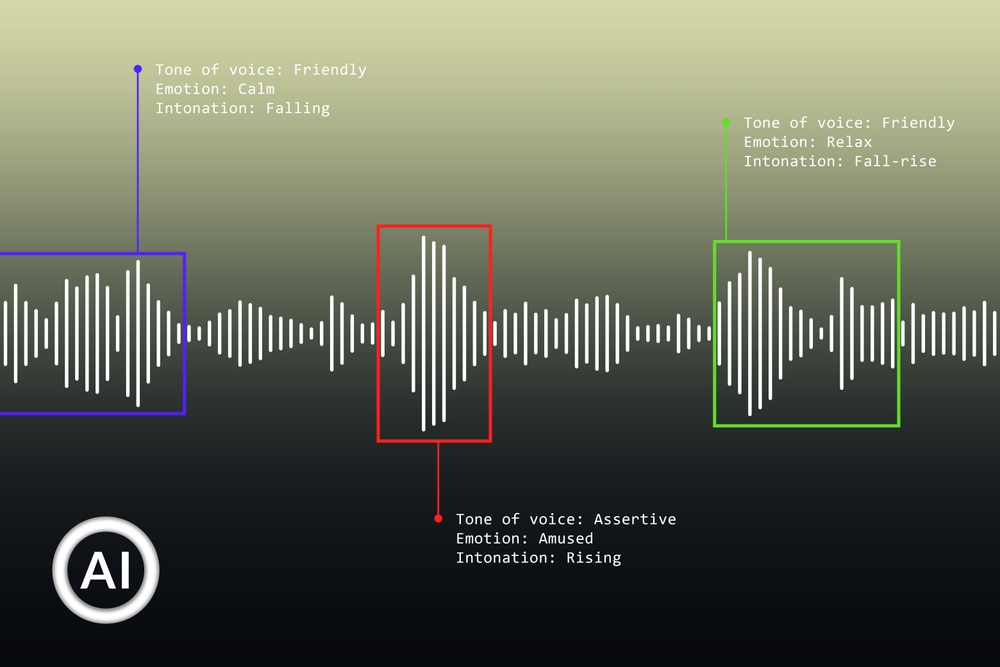

Sentiment Annotation for Speech Datasets

Sentiment analysis provides valuable insights that can often drive business decisions. Pangeanic has extensive experience in building its own sentiment analysis tools. To label sentiment reliably, annotators must be native speakers of the language who understand its nuances and intricacies, such as irony. Our recordings can be annotated as positive, negative, or neutral, adding data for training an ML model that can recognize sentiment and provide its own insights. Our PECAT text annotation tool speeds up all sentiment annotation tasks.

Challenges of Working with Speech Datasets

Working with speech datasets presents several challenges that are not typically encountered when working with other types of data. Some of the most significant challenges include:

- Variation in speech patterns: People speak at different rates, with different accents, and in different contexts. These variations can make it difficult to train models that can accurately recognize speech in all situations.

- Noise and interference: Background noise, such as music or other people talking, can interfere with speech recognition. This noise is usually filtered out to ensure accurate training, although some clients ask for it to be kept so that their systems learn to filter it out themselves.

- Data labeling: Speech datasets must be labeled with metadata that provides information about the language spoken, the speaker's gender, and the topic discussed. This labeling process used to be time-consuming and labor-intensive, but thanks to Pangeanic’s PECAT tool, annotation and labeling are becoming much simpler tasks.

Best Practices for Working with Speech Datasets

To overcome these challenges, there are several best practices that researchers and developers can follow when working with speech datasets. Our speech team ensures that we:

- Collect diverse data: To ensure that Machine Learning models can recognize speech accurately in all situations, it is essential to collect diverse speech data that represents a wide range of accents, languages, and contexts.

- Use high-quality recordings: Low-quality recordings make it harder to filter out background noise and interference, so it is essential to use high-quality audio recordings and discard the poor-quality ones.

- Enlist human annotators: While automated tools can help label speech data, human annotators are often better at capturing the nuances of language and can provide more accurate labeling.

Speech data is a critical component of AI training, particularly for applications that involve natural language processing. While working with speech data presents several challenges, following best practices and using the right tools and resources can help researchers and developers build accurate and effective speech recognition models.

Manage your recordings with PECAT

Do you have specific recordings to make?


We are here to help you with

- Speech to Text

- Text to Speech

- Sentiment Annotation for Speech Datasets
